Introduction

Before using this guide, you may find the following information useful:

- If you want a general introduction to Artificial Intelligence, check out our guide on Artificial Intelligence for Undergraduate Students.

- Remember, you should never pass off AI-generated work as your own, and your professor decides whether AI use is permitted in their class. Read the Guidelines for Using AI Tools in Academic Assignments for more details.

What is a prompt?

In generative artificial intelligence models, a prompt refers to the textual input supplied by users to direct the model’s output.

Basic prompts can be as simple as asking a direct question or providing instructions for a specific task. For example, with image generation models like DALL·E 3, prompts are often descriptive, while in large language models (LLMs) like ChatGPT or Gemini, they can be simple queries. Advanced prompts involve more complex structures, such as "chain of thought" prompting, where the model is guided to follow a logical reasoning process to arrive at an answer.
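To make the difference concrete, here is a minimal sketch that builds a basic prompt and a "chain of thought" prompt for the same question. The function names and the wording of the instruction are illustrative assumptions, not a required format; the code only builds text and does not send anything to a model.

```python
def basic_prompt(question: str) -> str:
    """Return the question unchanged: a simple, direct prompt."""
    return question


def chain_of_thought_prompt(question: str) -> str:
    """Add an instruction asking the model to reason step by step.

    The exact wording is an assumption made for this example.
    """
    return (
        f"{question}\n"
        "Work through the problem step by step, "
        "explaining each step before giving the final answer."
    )


question = "A library has 120 books and lends out 45. How many remain?"
print(basic_prompt(question))
print("---")
print(chain_of_thought_prompt(question))
```

Either string could be pasted into a chat tool; the second simply asks the model to show its reasoning before answering.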

Why do we need to write prompts?

Prompts are the primary way to communicate intentions, context, and goals to a language model. Unlike search engines or a library database that return existing documents, an AI generates responses based on your input. It is creating the text rather than retrieving content.

How does it do this? The first step is a process called tokenization.

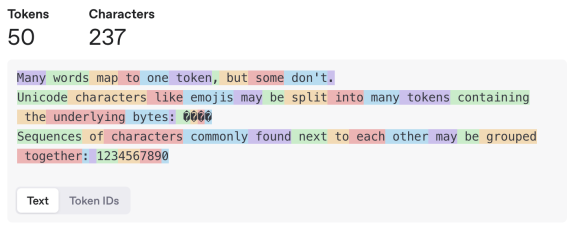

Tokenization plays a foundational role in how artificial intelligence understands and processes human language. Imagine you're trying to teach a computer how to read. Before it can truly grasp meaning or respond intelligently, it first needs to break down the language into pieces it can manage. This is what tokenization does — it slices text into smaller units called "tokens" that the AI can analyze and work with.

These tokens are often words, but not always. Sometimes a token is a part of a word, like "un" in "unhappy", or even a punctuation mark. This breakdown allows the AI to process language more efficiently because it no longer has to consider an entire sentence as a single, overwhelming chunk.
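The splitting described above can be sketched with a toy tokenizer. This is a simplified illustration, not how any particular AI tool works: the vocabulary `VOCAB` is a small hypothetical set chosen for the example, whereas real tokenizers learn tens of thousands of pieces from data.

```python
# Hypothetical toy vocabulary, chosen only for this example.
VOCAB = {"un", "happy", "machine", "learning", "the", "is", "!", ".", ","}


def tokenize(text: str) -> list[str]:
    """Split text into tokens using greedy longest-match against VOCAB.

    Any character that matches no vocabulary piece becomes its own token.
    """
    tokens = []
    for word in text.lower().split():
        start = 0
        while start < len(word):
            # Try the longest remaining piece first, then shrink it.
            for end in range(len(word), start, -1):
                piece = word[start:end]
                if piece in VOCAB or end == start + 1:
                    tokens.append(piece)
                    start = end
                    break
    return tokens


print(tokenize("Unhappy!"))      # ['un', 'happy', '!']
print(tokenize("tokenization"))  # falls back to single characters
```

A word that is missing from the vocabulary, such as "tokenization" here, is broken into many small pieces. This is the kind of fragmentation that tends to affect rare or technical words.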

Here is an example of tokenization from the ChatGPT tokenizer.

By converting language into a sequence of tokens, AI models can learn patterns, relationships, and context based on how tokens commonly appear together. For instance, in the phrase "machine learning", the AI learns that "machine" is often followed by "learning", which helps it predict and generate coherent text.
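The idea of learning which tokens follow which can be sketched by counting word pairs in a tiny sample of text. This is a deliberately simplified illustration: it assumes whole words as tokens and a made-up three-sentence `corpus`, while real models learn far richer patterns from far more data.

```python
from collections import Counter, defaultdict

# Made-up sample text, used only for this example.
corpus = [
    "machine learning is fun",
    "machine learning needs data",
    "the machine stopped",
]

# For each word, count the words that come directly after it.
follow_counts = defaultdict(Counter)
for sentence in corpus:
    words = sentence.split()
    for current, following in zip(words, words[1:]):
        follow_counts[current][following] += 1


def predict_next(word: str):
    """Return the most frequent follower of `word`, or None if none was seen."""
    followers = follow_counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


print(predict_next("machine"))  # learning
```

Because "machine" is followed by "learning" twice and by "stopped" only once in the sample, the sketch predicts "learning". The prediction reflects frequency in the sample text, not an understanding of meaning.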

Are responses to prompts always accurate?

All AI models are prone to making mistakes, usually referred to as hallucinations.

Tokenization and hallucinations in AI models like ChatGPT are loosely connected. Tokenization, the process of breaking text into smaller units, forms the basis for how the model reads and generates language. When text is split into token sequences that the model rarely saw during training, this can indirectly contribute to hallucinations: outputs that are fluent but factually wrong or entirely made up.

Hallucinations often stem from how the model interprets token patterns. Since models learn by predicting the next token, any noise, inconsistency, or bias in the training data can create misleading associations. For example, frequently co-occurring medical terms may cause the model to confidently generate incorrect diagnoses if those patterns aren’t well-grounded.

Tokenization also impacts the clarity of the model’s understanding. Models don’t understand words like humans do—they rely on token patterns. If tokenization fragments a word, especially a rare or technical one, the model might lose its meaning, resulting in distorted but plausible-sounding output.

This quote from Bender et al. (2021) sums up the problem:

[a Large Language Model] is a system for haphazardly stitching together sequences of linguistic forms it has observed in its vast training data, according to probabilistic information about how they combine, but without any reference to meaning (Bender et al., 2021, p. 617)

In short, while tokenization doesn’t directly cause hallucinations, it shapes how models process input and make predictions. Small issues can lead to significant errors. Clear, well-constructed prompts can help reduce the possibility of hallucinations, but they cannot prevent them.

So remember, always verify any information that is generated by AI, regardless of how confident the response may sound!